Meta platforms showed hundreds of "nudify" deepfake ads, CBS News finds

Meta has removed a number of ads promoting "nudify" apps — AI tools used to create sexually explicit deepfakes using images of real people — after a CBS News investigation found hundreds of such advertisements on its platforms.

"We have strict rules against non-consensual intimate imagery; we removed these ads, deleted the Pages responsible for running them and permanently blocked the URLs associated with these apps," a Meta spokesperson told CBS News in an emailed statement.

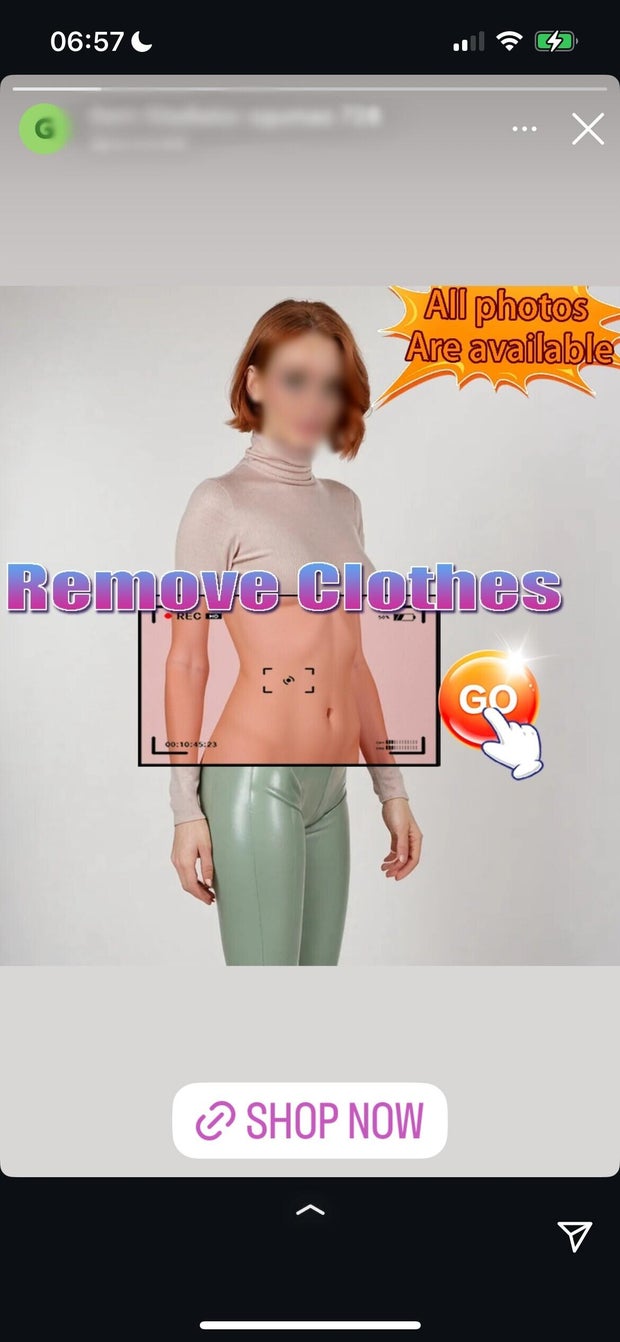

CBS News uncovered dozens of those ads on Meta's Instagram platform, in its "Stories" feature, promoting AI tools that, in many cases, advertised the ability to "upload a photo" and "see anyone naked." Other ads in Instagram's Stories promoted the ability to upload and manipulate videos of real people. One promotional ad even read "how is this filter even allowed?" as text underneath an example of a nude deepfake.

One ad promoted its AI product by using highly sexualized, underwear-clad deepfake images of actors Scarlett Johansson and Anne Hathaway. Some of the ads' URL links redirect to websites that promote the ability to animate real people's images and get them to perform sex acts. And some of the applications charged users between $20 and $80 to access these "exclusive" and "advance" features. In other cases, an ad's URL redirected users to Apple's app store, where "nudify" apps were available to download.

An analysis of the advertisements in Meta's ad library found that there were, at a minimum, hundreds of these ads available across the company's social media platforms, including on Facebook, Instagram, Threads, the Facebook Messenger application and Meta Audience Network — a platform that allows Meta advertisers to reach users on mobile apps and websites that partner with the company.

According to Meta's own Ad Library data, many of these ads were specifically targeted at men between the ages of 18 and 65, and were active in the United States, European Union and United Kingdom.

A Meta spokesperson told CBS News the spread of this sort of AI-generated content is an ongoing problem and they are facing increasingly sophisticated challenges in trying to combat it.

"The people behind these exploitative apps constantly evolve their tactics to evade detection, so we're continuously working to strengthen our enforcement," a Meta spokesperson said.

CBS News found that ads for "nudify" deepfake tools were still available on the company's Instagram platform even after Meta had removed those initially flagged.

Deepfakes are manipulated images, audio recordings, or videos of real people that have been altered with artificial intelligence to misrepresent someone as saying or doing something that the person did not actually say or do.

Last month, President Trump signed into law the bipartisan "Take It Down Act," which, among other things, requires websites and social media companies to remove deepfake content within 48 hours of notice from a victim.

Although the law makes it illegal to "knowingly publish" or threaten to publish intimate images without a person's consent, including AI-created deepfakes, it does not target the tools used to create such AI-generated content.

Those tools do violate platform safety and moderation rules implemented by both Apple and Meta on their respective platforms.

Meta's advertising standards policy says, "ads must not contain adult nudity and sexual activity. This includes nudity, depictions of people in explicit or sexually suggestive positions, or activities that are sexually suggestive."

Under Meta's "bullying and harassment" policy, the company also prohibits "derogatory sexualized photoshop or drawings" on its platforms. The company says its regulations are intended to block users from sharing or threatening to share nonconsensual intimate imagery.

Apple's guidelines for its app store explicitly state that "content that is offensive, insensitive, upsetting, intended to disgust, in exceptionally poor taste, or just plain creepy" is banned.

Alexios Mantzarlis, director of the Security, Trust, and Safety Initiative at Cornell University's tech research center, has been studying the surge in AI deepfake networks marketing on social platforms for more than a year. He told CBS News in a phone interview on Tuesday that he'd seen thousands more of these ads across Meta platforms, as well as on platforms such as X and Telegram, during that period.

Although Telegram and X have what he described as a structural "lawlessness" that allows for this sort of content, he believes Meta's leadership lacks the will to address the issue, despite having content moderators in place.

"I do think that trust and safety teams at these companies care. I don't think, frankly, that they care at the very top of the company in Meta's case," he said. "They're clearly under-resourcing the teams that have to fight this stuff, because as sophisticated as these [deepfake] networks are … they don't have Meta money to throw at it."

Mantzarlis also said his research found that "nudify" deepfake generators are available to download on both Apple's app store and Google's Play store, and he expressed frustration with these massive platforms' inability to enforce their rules against such content.

"The problem with apps is that they have this dual-use front where they present on the app store as a fun way to face swap, but then they are marketing on Meta as their primary purpose being nudification. So when these apps come up for review on the Apple or Google store, they don't necessarily have the wherewithal to ban them," he said.

"There needs to be cross-industry cooperation where if the app or the website markets itself as a tool for nudification on any place on the web, then everyone else can be like, 'All right, I don't care what you present yourself as on my platform, you're gone,'" Mantzarlis added.

CBS News has reached out to both Apple and Google for comment as to how they moderate their respective platforms. Neither company had responded by the time of writing.

Major tech companies' promotion of such apps raises serious questions about both user consent and online safety for minors. A CBS News analysis of one "nudify" website promoted on Instagram showed that the site did not prompt any form of age verification prior to a user uploading a photo to generate a deepfake image.

Such issues are widespread. In December, CBS News' 60 Minutes reported on the lack of age verification on one of the most popular sites using artificial intelligence to generate fake nude photos of real people.

Despite visitors being told that they must be 18 or older to use the site, and that "processing of minors is impossible," 60 Minutes was able to begin uploading photos immediately after clicking "accept" on the age warning prompt, with no other age verification necessary.

Data also shows that a high percentage of underage teenagers have interacted with deepfake content. A March 2025 study conducted by the children's protection nonprofit Thorn showed that among teens, 41% said they had heard of the term "deepfake nudes," while 10% reported personally knowing someone who had had deepfake nude imagery created of them.

Emmet Lyons is a news desk editor at the CBS News London bureau, coordinating and producing stories for all CBS News platforms. Prior to joining CBS News, Emmet worked as a producer at CNN for four years.
